Abstract

Recently, I had the opportunity to read some papers on financial data analysis, such as credit scoring and default rates. Personally, I want to try cutting-edge methods as soon as possible, but in this kind of case it is important to start from the basic flow. So, in this article, I'll follow the basic workflow, such as univariate analysis with Logistic Regression. To focus on the basic flow and some characteristics of the data, I'll skip some of the careful handling that the data and modeling would normally need.

This article roughly follows chapters 2 and 3 of the following article.

Data

As far as I checked, the number of public data sets in the finance area is limited. I'll use one that is available on Kaggle (the file is UCI_Credit_Card.csv). One of the big advantages of using a free Kaggle data set is that we can read the kernels other people have posted. You can download the data set from the link below.

Work Flow

I'll follow the flow below.

- check data

- pre-processing

- make Logistic Regression model with one specific variable

- do simple univariate analytics

- make Logistic Regression model with all the variables

Code

check data

First, load and check the data.

import pandas as pd

# load data

data_orig = pd.read_csv("../data/UCI_Credit_Card.csv")

Properly, we should handle the data with care: understand the precise background of each variable, its characteristics, its distribution, and so on. Here, I'll skip those steps to keep the article simple.

Generally, data on credit scores and default rates has the following characteristics.

- When the default flag is the explained variable, the data tend to be imbalanced: defaults are much rarer than non-defaults.

- Missing values are not a big problem.

Missing values are rare because of the process by which the information is collected: customers who provided wrong or missing information don't receive credit in the first place.
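Because of this imbalance, it can help to keep the default rate the same in the train and test data when splitting. The article itself uses a plain random split; the minimal sketch below swaps in the `stratify` option of scikit-learn's `train_test_split`, and uses synthetic labels with 22% defaults as an assumed stand-in for the real target.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the target: 220 defaults out of 1,000 customers (22%).
y = np.array([1] * 220 + [0] * 780)
X = np.arange(len(y)).reshape(-1, 1)  # dummy feature, only needed for the split

# stratify=y splits each class separately, so both parts keep roughly 22% defaults.
x_tr, x_te, y_tr, y_te = train_test_split(X, y, test_size=0.33, stratify=y, random_state=0)

print("overall:", y.mean(), "train:", y_tr.mean(), "test:", y_te.mean())
```

Without `stratify`, the two default rates can drift apart by chance, which matters more the rarer the positive class is.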

If we check the default rate, about 22 percent of the customers (6,636 of 30,000) defaulted.

print("data size: " + str(data_orig.shape))

# .ix was removed from pandas; select the default rows with .loc instead
print("default size: " + str(data_orig.loc[data_orig['default.payment.next.month'] == 1, :].shape))

data size: (30000, 25)

default size: (6636, 25)

pre-processing

I'll do some simple pre-processing before making models. Strictly speaking, ID is not a column we should drop without consideration and investigation, but here I'll drop it. I'll also one-hot encode the categorical data.

# omit-target columns

omit_target_label = ['ID']

# categorical columns

# the repayment-status columns are PAY_0, PAY_2, ..., PAY_6 (there is no PAY_1)
pay_label = ['PAY_'+str(i) for i in range(0,7) if i != 1]

categorical_label = ['SEX', 'EDUCATION', 'MARRIAGE']

categorical_label.extend(pay_label)

dummied_columns = pd.get_dummies(data_orig[categorical_label].astype('category'))

# drop columns

data_orig = data_orig.drop(columns=categorical_label)

data_orig = data_orig.drop(columns=omit_target_label)

# merge one-hot-encoded columns

data = pd.concat([data_orig, dummied_columns], axis=1, join='outer')

Continuous variables can be grouped into intervals (binning). This sacrifices some information, but it improves interpretability and lets all the variables be handled in the same way. In this article, I'll use continuous variables as they are.
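For reference, a minimal sketch of the binning idea with pandas, using equal-frequency intervals via `pd.qcut`. The values are synthetic (a random stand-in for the LIMIT_BAL column), and five bins is an arbitrary assumption.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
# Synthetic stand-in for a continuous column such as LIMIT_BAL (not the real data).
limit_bal = pd.Series(rng.integers(10_000, 800_000, size=1_000), name='LIMIT_BAL')

# Equal-frequency bins: each interval holds roughly the same number of customers.
limit_bin = pd.qcut(limit_bal, q=5)
print(limit_bin.value_counts().sort_index())

# The binned column can then be one-hot encoded like the categorical columns.
limit_dummies = pd.get_dummies(limit_bin, prefix='LIMIT_BAL')
print(limit_dummies.shape)
```

`pd.cut` would give equal-width intervals instead; equal-frequency bins avoid nearly empty intervals when a variable is skewed, as amounts of money often are.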

make Logistic Regression model with one specific variable

Using one of the variables, I'll make a Logistic Regression model. Later, I'll apply this process to all the variables one by one. The first step is to split the data into train and test data.

from sklearn.model_selection import train_test_split

# explaining and explained

target = data['default.payment.next.month']

data = data.drop(columns=['default.payment.next.month'])

# split data into train and test

# no random_state is set, so the split (and every score below) changes from run to run

x_train, x_test, y_train, y_test = train_test_split(data, target, test_size = 0.33)

Using one explanatory variable, train a Logistic Regression model.

from sklearn.linear_model import LogisticRegression

univar = x_train[['BILL_AMT4']]

lr = LogisticRegression()

lr.fit(univar, y_train)

sklearn has roc_auc_score() for evaluation.

from sklearn.metrics import roc_auc_score

import numpy as np

predicted_score = np.array([score[1] for score in lr.predict_proba(x_test[['BILL_AMT4']])])

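roc_auc_score(y_test.values, predicted_score)

0.4965684683473838

An AUC this close to 0.5 means that BILL_AMT4 alone separates defaulters from non-defaulters no better than random guessing.

do simple univariate analytics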

I'll apply the process above to all the variables one by one. If the data had time information, I could also split it into groups by time and check the robustness of the models by predicting each group.

explaining_labels = x_train.columns

auc_outcomes = {}

for label in explaining_labels:
    univar = x_train[[label]]
    lr = LogisticRegression()
    lr.fit(univar, y_train)
    predicted_score = np.array([score[1] for score in lr.predict_proba(x_test[[label]])])
    auc_outcomes[label] = roc_auc_score(y_test.values, predicted_score)

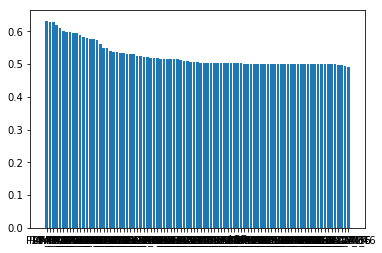
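Each value in `auc_outcomes` is a ROC AUC. One way to read it: the AUC is the probability that a randomly chosen defaulter gets a higher score than a randomly chosen non-defaulter, with ties counting as half. That is why 0.5 means no ranking power at all (as with BILL_AMT4 above) and 1.0 means a perfect ranking. The sketch below checks this pairwise definition against `roc_auc_score` on a tiny made-up example; `pairwise_auc` is a hypothetical helper and only suitable for small inputs, since it compares every pair.

```python
import numpy as np
from sklearn.metrics import roc_auc_score


def pairwise_auc(y_true, scores):
    """Hypothetical helper: share of (default, non-default) pairs ranked correctly."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos = scores[y_true == 1]
    neg = scores[y_true == 0]
    # Every defaulter's score minus every non-defaulter's score (broadcasting).
    diff = pos[:, None] - neg[None, :]
    # Correctly ordered pairs count 1, ties count 0.5.
    return ((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size


y = np.array([0, 0, 1, 1, 0, 1])
s = np.array([0.1, 0.4, 0.35, 0.8, 0.2, 0.7])
print(pairwise_auc(y, s), roc_auc_score(y, s))
```

Here 8 of the 9 (defaulter, non-defaulter) pairs are ordered correctly (only 0.35 vs 0.4 is wrong), so both values equal 8/9.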

By visualizing the AUC scores, we can compare the univariate models of all the variables.

%matplotlib inline

import matplotlib.pyplot as plt

label = []

score = []

for item in sorted(auc_outcomes.items(), key=lambda x: x[1], reverse=True):
    label.append(item[0])
    score.append(item[1])

plt.bar(label, score)

# rotate the variable names so that they don't overlap
plt.xticks(rotation=90)
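In credit scoring, the power of a variable is often also reported as the Gini coefficient (accuracy ratio), which is just a rescaling of the AUC: Gini = 2 * AUC - 1, so 0 means no power and 1 a perfect ranking. A small sketch, assuming `auc_outcomes` is the dictionary built above; `auc_table` is a hypothetical helper and the example values are made up.

```python
import pandas as pd


def auc_table(auc_outcomes):
    """Hypothetical helper: tabulate {variable: AUC} together with Gini = 2 * AUC - 1."""
    table = pd.Series(auc_outcomes, name='auc').to_frame()
    table['gini'] = 2 * table['auc'] - 1
    # Strongest variables first, in the same order as the bar chart.
    return table.sort_values('auc', ascending=False)


# Made-up AUC values for illustration; pass auc_outcomes for the real ones.
example = {'var_a': 0.70, 'var_b': 0.50, 'var_c': 0.62}
print(auc_table(example))
```

A table like this is also easier to read than the bar chart when there are many one-hot-encoded columns.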

make Logistic Regression model with all the variables

Next, using all the explanatory variables, I'll make a Logistic Regression model. Properly, I should think carefully about the combination of variables and other factors, but here I won't. First, I'll make a model even without standardization.

# using all the explaining variables

lr = LogisticRegression()

lr.fit(x_train, y_train)

predicted_score = np.array([score[1] for score in lr.predict_proba(x_test)])

roc_auc_score(y_test.values, predicted_score)

0.6511863475572789

As an example, I'll also show the Brier score loss.

from sklearn.metrics import brier_score_loss

brier_score_loss(y_test.values, predicted_score)

0.1645656857859405

Using the model, I can predict scores for both the train data and the test data. By changing the default rate, I can also make several versions of the data set, such as one with a 20% default rate and one with a 50% default rate. Checking a model's scores on those data sets with several evaluation methods shows how robust it is, but I won't do that here.

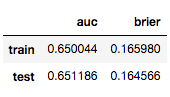
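The robustness check described above, which the article skips, could be sketched as follows: fit on the train data, build test sets with a chosen default rate by subsampling rows, and compare AUC and Brier score across them. Everything here is an assumption: the data come from `make_classification` rather than the credit card file, and `resample_to_default_rate` is a hypothetical helper that keeps as many rows as possible by shrinking whichever class is in excess.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import brier_score_loss, roc_auc_score
from sklearn.model_selection import train_test_split


def resample_to_default_rate(X, y, rate, seed=0):
    """Hypothetical helper: subsample rows so that about `rate` of y equals 1."""
    rng = np.random.default_rng(seed)
    pos = np.flatnonzero(y.to_numpy() == 1)
    neg = np.flatnonzero(y.to_numpy() == 0)
    # Try to keep every default and drop non-defaults; if there are not enough
    # non-defaults for the target rate, keep them all and drop defaults instead.
    n_neg = int(round(len(pos) * (1 - rate) / rate))
    if n_neg <= len(neg):
        n_pos = len(pos)
    else:
        n_neg = len(neg)
        n_pos = int(round(len(neg) * rate / (1 - rate)))
    idx = np.sort(np.concatenate([rng.choice(pos, n_pos, replace=False),
                                  rng.choice(neg, n_neg, replace=False)]))
    return X.iloc[idx], y.iloc[idx]


# Synthetic stand-in for the credit data: roughly 22% positives ("defaults").
X, y = make_classification(n_samples=3000, n_features=5, weights=[0.78], random_state=0)
X, y = pd.DataFrame(X), pd.Series(y)
x_tr, x_te, y_tr, y_te = train_test_split(X, y, test_size=0.33, random_state=0)
model = LogisticRegression().fit(x_tr, y_tr)

rows = []
for rate in (0.2, 0.5):
    xs, ys = resample_to_default_rate(x_te, y_te, rate)
    p = model.predict_proba(xs)[:, 1]
    rows.append({'target_rate': rate, 'actual_rate': ys.mean(),
                 'auc': roc_auc_score(ys, p), 'brier': brier_score_loss(ys, p)})
results = pd.DataFrame(rows)
print(results)
```

Because AUC depends only on how defaulters and non-defaulters are ranked, it tends to stay similar across such resampled sets, while the Brier score depends on the class balance and on calibration, so it typically shifts with the default rate.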

Here, I'll just check the AUC and the Brier score on the train and test data.

predicted_score_train = np.array([score[1] for score in lr.predict_proba(x_train)])

predicted_score_test = np.array([score[1] for score in lr.predict_proba(x_test)])

auc_train = roc_auc_score(y_train.values, predicted_score_train)

auc_test = roc_auc_score(y_test.values, predicted_score_test)

brier_train = brier_score_loss(y_train.values, predicted_score_train)

brier_test = brier_score_loss(y_test.values, predicted_score_test)

auc = [auc_train, auc_test]

brier = [brier_train, brier_test]

pd.DataFrame({'auc': auc, 'brier': brier}, index=['train', 'test'])
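For reading the Brier column: the Brier score is the mean squared difference between the predicted default probability and the actual 0/1 outcome, so lower is better. A useful reference point is a naive model that predicts the default rate p for everyone; its Brier score is p(1 - p), which is about 0.17 for a default rate of roughly 22%. The full model's test Brier of 0.165 is therefore only slightly better than that baseline, in line with its modest AUC of 0.651. The small sketch below, on made-up numbers, checks the definition against `brier_score_loss` and computes the baseline.

```python
import numpy as np
from sklearn.metrics import brier_score_loss

# Made-up outcomes and predicted default probabilities.
y_true = np.array([0, 1, 1, 0])
p_default = np.array([0.1, 0.9, 0.6, 0.3])

# Brier score: mean of (predicted probability - outcome)^2.
manual = np.mean((p_default - y_true) ** 2)
print(manual, brier_score_loss(y_true, p_default))

# Naive baseline: predict the default rate for everyone (22 defaults in 100 here).
y_base = np.array([1] * 22 + [0] * 78)
p_const = np.full(len(y_base), y_base.mean())
baseline = brier_score_loss(y_base, p_const)
print(baseline)  # p * (1 - p) = 0.22 * 0.78 = 0.1716, up to floating-point rounding
```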

appendix

So far, because the purpose of this article is just to follow the workflow of a simple analysis, I haven't even standardized the data. For reference, I'll show how the AUC score changes with standardization.

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

pipe_lr = Pipeline([

('sc', StandardScaler()),

('lr', LogisticRegression())

])

pipe_lr.fit(x_train, y_train)

predicted_score_sc = np.array([score[1] for score in pipe_lr.predict_proba(x_test)])

roc_auc_score(y_test.values, predicted_score_sc)

0.768681648183735

Check the scores with cross validation.

# sklearn.cross_validation was removed in scikit-learn 0.20; use sklearn.model_selection
from sklearn.model_selection import cross_val_score

pipe_lr = Pipeline([

('sc', StandardScaler()),

('lr', LogisticRegression())

])

scores = cross_val_score(estimator=pipe_lr,

X=x_train,

y=y_train,

cv = 10,

scoring='roc_auc')

print(scores)

[0.77526648 0.74356037 0.76435687 0.7648575 0.77505218 0.76580115

0.78112977 0.74257995 0.75616341 0.7857274 ]
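The ten fold scores are easier to compare with the single hold-out AUC once summarized. Because the StandardScaler sits inside the Pipeline, `cross_val_score` refits it on the training folds of each split, so the held-out fold never influences the scaling. The sketch below only summarizes the scores printed above.

```python
import numpy as np

# The ten ROC AUC values printed by cross_val_score above.
scores = np.array([0.77526648, 0.74356037, 0.76435687, 0.7648575, 0.77505218,
                   0.76580115, 0.78112977, 0.74257995, 0.75616341, 0.7857274])

# Population standard deviation (np.std default, ddof=0).
print("mean AUC: %.3f, std: %.3f" % (scores.mean(), scores.std()))
```

This gives a mean of about 0.765 with a standard deviation of about 0.014, consistent with the hold-out AUC of 0.769 for the standardized model and well above the 0.651 obtained without standardization.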