Overview

In this article, I’ll note down an example of tf.variable_scope(), that is, how to organize the graph for TensorBoard. The target code is from the article below.

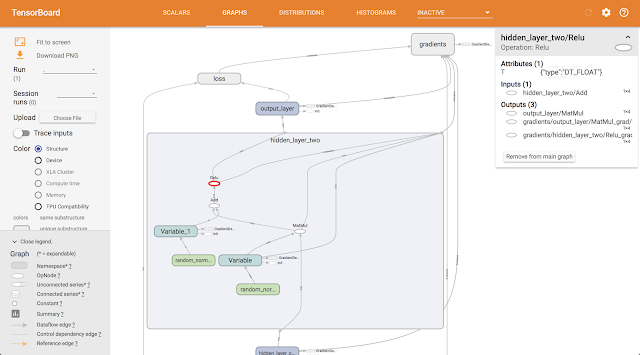

On TensorBoard, the graph becomes complex and hard to read when no namespaces are used. Namespaces solve this problem and make the graph easier to debug.
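To make the effect of a namespace concrete, here is a minimal sketch that builds the same ops with and without a scope and prints their names. It assumes a TensorFlow install that provides `tensorflow.compat.v1` (an assumption, so that the TF 1.x-style API used in this article also runs on TensorFlow 2.x); the scope name `layer` and the variable names are only illustrative.

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # assumption: run TF 1.x-style graph code on TF 2.x

graph = tf.Graph()
with graph.as_default():
    # Without a scope, ops get flat, auto-generated names.
    x_flat = tf.placeholder(shape=[None, 3], dtype=tf.float32)
    doubled_flat = tf.multiply(x_flat, 2.0)

    # Inside a scope, every op name is prefixed with "layer/".
    with tf.variable_scope('layer'):
        x_scoped = tf.placeholder(shape=[None, 3], dtype=tf.float32)
        doubled_scoped = tf.multiply(x_scoped, 2.0)

# Print the op names to compare the flat and the scoped versions.
for tensor in (x_flat, doubled_flat, x_scoped, doubled_scoped):
    print(tensor.op.name)
```

TensorBoard groups ops that share a prefix such as `layer/` into one collapsible node, which is why scoped graphs are easier to read.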

The example of tf.variable_scope

From the code doc:

“This context manager validates that the (optional) values are from the same graph, ensures that graph is the default graph, and pushes a name scope and a variable scope.”

Below I’ll show part of the code as an example of how tf.variable_scope is used. With it, the “data” part is shown as a separate node on the TensorBoard graph.

# placeholder

with tf.variable_scope('data'):

    x_data = tf.placeholder(shape=[None, 3], dtype=tf.float32)

    y_target = tf.placeholder(shape=[None, 1], dtype=tf.float32)

In the same way, we can set a namespace for each layer of the neural network.
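The per-layer idea can be sketched with a small helper that wraps each layer in its own scope. This is only an illustration: `dense_layer` is a hypothetical helper that does not appear in the original code, and the `tensorflow.compat.v1` import is an assumption so the TF 1.x-style API also runs on TensorFlow 2.x.

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # assumption: TF 1.x-style API on a TF 2.x install


def dense_layer(scope, inputs, n_in, n_out):
    """Hypothetical helper: one ReLU layer whose ops and variables live in `scope`."""
    with tf.variable_scope(scope):
        weight = tf.get_variable('weight', [n_in, n_out],
                                 initializer=tf.random_normal_initializer())
        bias = tf.get_variable('bias', [n_out],
                               initializer=tf.random_normal_initializer())
        return tf.nn.relu(tf.add(tf.matmul(inputs, weight), bias))


graph = tf.Graph()
with graph.as_default():
    with tf.variable_scope('data'):
        x_data = tf.placeholder(shape=[None, 3], dtype=tf.float32)

    # Each call creates its variables and ops under its own prefix.
    hidden_1 = dense_layer('hidden_layer_one', x_data, 3, 6)
    hidden_2 = dense_layer('hidden_layer_two', hidden_1, 6, 4)

    variable_names = [v.name for v in tf.global_variables()]

print(variable_names)
print(hidden_2.op.name)
```

Because the scope already separates the layers, the variables can share the short names `weight` and `bias` without colliding.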

TensorBoard GRAPHS

The whole code is at the bottom of this article. After executing the code, we can access TensorBoard at http://localhost:6006. Because I didn’t set the random number seed, the behavior of the loss is not consistent between runs. The graph part is the same, though, so please ignore that this time. Anyway, the graph looks like this.

It is neatly organized into one namespace per layer. When you want to inspect a specific layer, click it to see its details.
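As a rough sketch of how the graph reaches TensorBoard, the snippet below writes a tiny scoped graph to a log directory and checks that an event file was created. The temporary `logdir` is a hypothetical stand-in for the `./graph` directory used in the full code, and the `tensorflow.compat.v1` import is an assumption so the TF 1.x-style API also runs on TensorFlow 2.x.

```python
import os
import tempfile

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # assumption: TF 1.x-style API on a TF 2.x install

graph = tf.Graph()
with graph.as_default():
    with tf.variable_scope('data'):
        x_data = tf.placeholder(shape=[None, 3], dtype=tf.float32)
    with tf.variable_scope('output_layer'):
        total = tf.reduce_sum(x_data)

# Hypothetical log directory; the article's full code writes to './graph'.
logdir = tempfile.mkdtemp()
writer = tf.summary.FileWriter(logdir, graph)
writer.close()  # flush the event file to disk

event_files = [f for f in os.listdir(logdir)
               if f.startswith('events.out.tfevents')]
print(logdir, event_files)
# Then, from a shell: tensorboard --logdir <logdir>
```

With TensorBoard started on that directory, the GRAPHS tab at the default port 6006 should show `data` and `output_layer` as separate nodes.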

Whole code

from sklearn import datasets

iris = datasets.load_iris()

print(iris.data[:5])

import matplotlib.pyplot as plt

import numpy as np

import tensorflow as tf

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

# set random seed

seed = 42

tf.set_random_seed(seed)

np.random.seed(seed)

# data

iris = datasets.load_iris()

x = np.array([x[0:3] for x in iris.data])

y = np.array([x[3] for x in iris.data])

x_train, x_test, y_train, y_test = train_test_split(x, y, train_size=0.7)

# normalization

mms = MinMaxScaler()

x_train = mms.fit_transform(x_train)

x_test = mms.transform(x_test)  # reuse the scaling fitted on the training data

batch_size = 50

# placeholder

with tf.variable_scope('data'):

    x_data = tf.placeholder(shape=[None, 3], dtype=tf.float32)

    y_target = tf.placeholder(shape=[None, 1], dtype=tf.float32)

# parameters

with tf.variable_scope('hidden_layer_one'):

    W1 = tf.get_variable('weight_1', [3, 6], initializer=tf.random_normal_initializer())

    b1 = tf.get_variable('bias_1', [6], initializer=tf.random_normal_initializer())

    hidden_1 = tf.nn.relu(tf.add(tf.matmul(x_data, W1), b1))

with tf.variable_scope('hidden_layer_two'):

    W2 = tf.get_variable('weight_2', [6, 4], initializer=tf.random_normal_initializer())

    b2 = tf.get_variable('bias_2', [4], initializer=tf.random_normal_initializer())

    hidden_2 = tf.nn.relu(tf.add(tf.matmul(hidden_1, W2), b2))

with tf.variable_scope('output_layer'):

    W3 = tf.get_variable('weight_3', [4, 1], initializer=tf.random_normal_initializer())

    b3 = tf.get_variable('bias_3', [1], initializer=tf.random_normal_initializer())

    output = tf.nn.relu(tf.add(tf.matmul(hidden_2, W3), b3))

# loss

with tf.variable_scope('loss'):

    loss = tf.reduce_mean(tf.square(y_target - output), name='loss')

with tf.name_scope('summaries'):

    tf.summary.scalar('loss', loss)

    # summary names should not contain spaces

    tf.summary.histogram('histogram_loss', loss)

    summary_op = tf.summary.merge_all()

# optimize

optimizer = tf.train.GradientDescentOptimizer(0.005)

train_step = optimizer.minimize(loss)

with tf.Session() as sess:

    # initialize variables

    init = tf.global_variables_initializer()

    sess.run(init)

    # visualize

    writer = tf.summary.FileWriter('./graph', sess.graph)

    train_loss = []

    test_loss = []

    for i in range(300):

        # index for training

        random_index = np.random.choice(len(x_train), size=batch_size)

        # prepare data

        random_x = x_train[random_index]

        random_y = np.transpose([y_train[random_index]])

        _, temp_loss, summary = sess.run([train_step, loss, summary_op], feed_dict={x_data: random_x, y_target: random_y})

        writer.add_summary(summary, global_step=i)

        if i % 50 == 0:

            print(str(i) + ':' + str(temp_loss))

        if i == 300 - 1:

            pred = sess.run(output, feed_dict={x_data: x_test})

            pred_list = [x[0] for x in pred]

            print(np.transpose(np.array([y_test, pred_list])))

    # flush the remaining summaries to disk

    writer.close()