In the following articles, I wrote about kNN. But, for some reason, I have never covered its simplest usage: how to use the kNN implementation in the sklearn library.

- kNN by Golang from scratch

- How to write kNN by TensorFlow

- Speed up naive kNN by the concept of kmeans

- CNN + KNN model accuracy

Overview

The most common way to use machine learning algorithms is through libraries. Of course, you can also build models from scratch or with other tools such as TensorFlow. But unless the common machine learning libraries cannot do what you need, you should use them as much as possible.

In this article, we look at how to use sklearn's kNN.

kNN algorithm

In short, kNN works as follows.

The kNN algorithm is very simple. To predict the label of a data point, it takes the labels of the k nearest training points and predicts the label by majority vote.

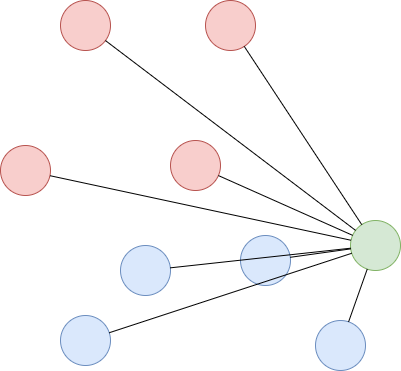

In the image above, the green circle is the prediction target, and the other two colors indicate the classes the circles belong to. The kNN algorithm takes the k circles nearest to the green circle and checks their colors; the majority color becomes the prediction.

For details, please read kNN by Golang from scratch.
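To make the procedure concrete, here is a minimal from-scratch sketch with NumPy, assuming Euclidean distance and a plain majority vote. The function name `knn_predict` and the toy data are hypothetical, for illustration only; this is not how sklearn implements kNN internally.

```python
from collections import Counter

import numpy as np


def knn_predict(x_train, y_train, x_query, k=3):
    """Hypothetical helper: predict one query's label by majority vote of its k nearest neighbors."""
    # Euclidean distance from the query point to every training point
    distances = np.linalg.norm(x_train - x_query, axis=1)
    # Indices of the k smallest distances
    nearest = np.argsort(distances)[:k]
    # Majority vote over the labels of the k nearest points
    votes = Counter(y_train[nearest])
    return votes.most_common(1)[0][0]


# Toy data: class 0 clustered around (0, 0), class 1 clustered around (5, 5)
x_train = np.array([[0.0, 0.0], [0.5, 0.2], [0.1, 0.8],
                    [5.0, 5.0], [5.2, 4.8], [4.9, 5.3]])
y_train = np.array([0, 0, 0, 1, 1, 1])

print(knn_predict(x_train, y_train, np.array([0.3, 0.4]), k=3))  # 0
print(knn_predict(x_train, y_train, np.array([4.5, 5.0]), k=3))  # 1
```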

Reference

Basically, when we use machine learning libraries, the best reference is the official documentation.

scikit-learn's official documentation has many usage examples. I suggest reading it.

This article covers only simple usage. But when you use machine learning algorithms in practice, knowing how to write the code is not enough. The book below is useful for learning how to build machine learning models properly.

Simplest example

Here is the simplest example. The code below builds a classification model on the iris dataset.

```python
from sklearn import datasets
from sklearn.neighbors import KNeighborsClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

# data preparation
iris = datasets.load_iris()
X = iris.data
y = iris.target

# split data into train and test
x_train, x_test, y_train, y_test = train_test_split(X, y, train_size=0.7)

# make model
knn = KNeighborsClassifier(n_neighbors=3)
knn.fit(x_train, y_train)

# check accuracy
print(accuracy_score(y_test, knn.predict(x_test)))
```

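```
0.977777777778
```

As you can see, only a few lines are enough to build a model. The exact accuracy varies between runs, because train_test_split shuffles the data randomly; pass random_state to make the split reproducible.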

```python
# make model
knn = KNeighborsClassifier(n_neighbors=3)
knn.fit(x_train, y_train)
```

In this part, I set the parameter n_neighbors=3. This is the k in kNN: the number of nearest points to the prediction target that the model uses for the vote.
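Since k is a hyperparameter, in practice you usually try several values. Below is a minimal sketch, assuming 5-fold cross-validation with cross_val_score is an acceptable way to compare them; the candidate values of k are arbitrary choices for illustration.

```python
from sklearn import datasets
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

iris = datasets.load_iris()
X, y = iris.data, iris.target

# Compare several values of k (n_neighbors) by mean 5-fold cross-validation accuracy
scores = {}
for k in [1, 3, 5, 7, 9]:
    knn = KNeighborsClassifier(n_neighbors=k)
    scores[k] = cross_val_score(knn, X, y, cv=5).mean()
    print(f"k={k}: mean accuracy={scores[k]:.3f}")

# Pick the k with the highest mean accuracy (ties go to the first one checked)
best_k = max(scores, key=scores.get)
print("best k:", best_k)
```

Cross-validation averages over several splits, so it is less sensitive to one lucky or unlucky train/test split than a single accuracy_score.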

About optional parameters

Although kNN is a very simple algorithm, it has a few points that can be customized:

- Which distance?

- Which weights for class selection?

This algorithm relies on a distance measure. There are several kinds of distance, and we can choose which one to use.

The weights for class selection are less intuitive. Let's consider the example below.
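In sklearn the distance is chosen with the metric parameter. The default is metric="minkowski" with p=2, which is the Euclidean distance; p=1 gives the Manhattan distance. Here is a minimal sketch comparing a few metrics on the iris data; random_state=0 is an arbitrary choice added only to make the split reproducible, and the resulting accuracies say nothing general about which metric is better.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = datasets.load_iris()
# random_state=0 is an arbitrary choice to make the split reproducible
x_train, x_test, y_train, y_test = train_test_split(
    iris.data, iris.target, train_size=0.7, random_state=0
)

# metric="minkowski" with p=2 (the default) is the Euclidean distance
models = {
    "euclidean (p=2)": KNeighborsClassifier(n_neighbors=3, metric="minkowski", p=2),
    "manhattan (p=1)": KNeighborsClassifier(n_neighbors=3, metric="minkowski", p=1),
    "chebyshev": KNeighborsClassifier(n_neighbors=3, metric="chebyshev"),
}

accuracies = {}
for name, model in models.items():
    model.fit(x_train, y_train)
    # score() returns the mean accuracy on the test data
    accuracies[name] = model.score(x_test, y_test)
    print(f"{name}: {accuracies[name]:.3f}")
```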

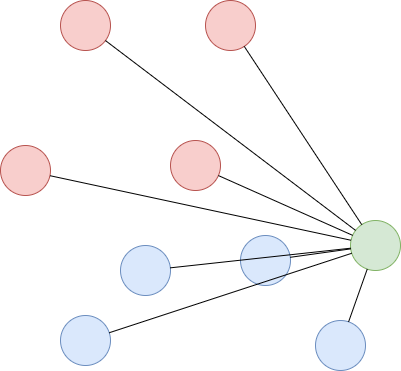

In the image above, there are two classes, red and blue, and we want to predict the class of the green circle. First, the kNN model calculates the distances between the green circle and the other circles and picks the k nearest ones. Here k is set to 3, so the three nearest circles are two blue and one red. Naively, by majority vote, the kNN algorithm classifies the green circle as blue.

In a case like this, however, the model also has the distance information. Even among the k picked circles, each circle's importance differs depending on how far it is from the target (the green circle), so the distances can be used as weights for the picked circles.
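The step described here (compute the distances, keep the k nearest) can be inspected with the kneighbors method of a fitted KNeighborsClassifier, which returns the distances to, and the indices of, the k nearest training points. This is a minimal sketch; the coordinates are hypothetical and only mimic the situation in the figure (one red and two blue circles among the 3 nearest), since the actual positions are not given.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical coordinates mimicking the figure
X = np.array([
    [0.8, 0.0],   # red, distance 0.8 from the target
    [0.0, 2.0],   # blue, distance 2.0
    [-2.1, 0.0],  # blue, distance 2.1
    [5.0, 5.0],   # blue, far away
    [6.0, 6.0],   # red, far away
])
y = np.array(["red", "blue", "blue", "blue", "red"])
target = np.array([[0.0, 0.0]])  # the "green circle"

knn = KNeighborsClassifier(n_neighbors=3)
knn.fit(X, y)

# Distances to and indices of the 3 nearest training points, closest first
distances, indices = knn.kneighbors(target)
print(distances[0])
print(y[indices[0]])        # labels of the picked points: one red, two blue
print(knn.predict(target))  # plain majority vote -> ['blue']
```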

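Both choices can be changed through optional parameters of KNeighborsClassifier: metric (together with p) for the distance, and weights for the weighting. weights accepts "uniform" (the default, every neighbor gets one vote) or "distance" (each vote is weighted by the inverse of the neighbor's distance).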
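Here is a minimal sketch of the weights option, assuming hypothetical coordinates chosen so that the single red neighbor is much closer to the target than the two blue ones (the real positions in the figure are not given). With weights="uniform" blue wins 2 votes to 1; with weights="distance" red gets 1/0.8 = 1.25 against 1/2.0 + 1/2.1 ≈ 0.98 for blue, so the prediction flips.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical coordinates: the only red neighbor is closest to the target
X = np.array([
    [0.8, 0.0],   # red, distance 0.8 from the target
    [0.0, 2.0],   # blue, distance 2.0
    [-2.1, 0.0],  # blue, distance 2.1
    [5.0, 5.0],   # blue, far away
    [6.0, 6.0],   # red, far away
])
y = np.array(["red", "blue", "blue", "blue", "red"])
target = np.array([[0.0, 0.0]])  # the "green circle"

predictions = {}
for weights in ["uniform", "distance"]:
    knn = KNeighborsClassifier(n_neighbors=3, weights=weights)
    knn.fit(X, y)
    predictions[weights] = knn.predict(target)[0]
    # predict_proba columns follow knn.classes_, here ["blue", "red"]
    print(weights, predictions[weights], knn.predict_proba(target)[0])
```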