Abstract

This article covers the basics of the Inception module and how to code it. GoogLeNet, which is built by stacking Inception modules, achieved state-of-the-art results in ILSVRC 2014. Many readers have probably already used models with “Inception” in their name for fine-tuning. In this article, I'll focus on the Inception module itself.

To write the model, I'll use Keras with Python.

To deepen your knowledge, you can read the original paper, “Going Deeper with Convolutions” by Szegedy et al., https://arxiv.org/abs/1409.4842.

Inception module

Structure of Convolutional Neural Network

Before tackling the Inception module, I'll briefly cover the structure of a CNN (Convolutional Neural Network) itself. A CNN is composed of convolutional layers and dense layers. Strictly speaking, input layers, output layers and pooling layers belong on that list too. When designing the network architecture, we need to decide the number of layers and the number of nodes in each layer.

In the image below, the red circles represent nodes and each blue rectangle represents one layer. When we build a CNN model, we need to decide these numbers for both the convolutional and the dense layers.

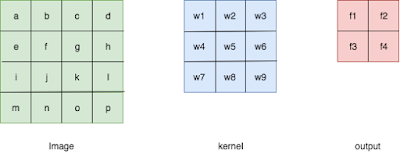

From here, let's focus on the convolutional layer. Assume an input image whose height and width are both four pixels.

When we apply convolution to this image, we choose a kernel size. As an example, take a kernel of size (3, 3) with stride (1, 1).

In a convolutional neural network, the kernel weights w1 to w9 are the parameters that get updated during training.
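To make the sliding-window computation concrete, here is a minimal NumPy sketch. The helper name `conv2d_valid`, the pixel values 0 to 15, and the weights 1 to 9 are hypothetical choices for illustration. The sketch applies a (3, 3) kernel to a 4 x 4 image with stride (1, 1) and no padding. Like CNN libraries, it does not flip the kernel, so strictly speaking it is a cross-correlation.

```python
import numpy as np

def conv2d_valid(image, kernel, stride=1):
    """Slide `kernel` over `image` without padding and return the feature map."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            # Each output value is the weighted sum w1*x1 + ... + w9*x9 over one patch.
            out[i, j] = np.sum(patch * kernel)
    return out

# Hypothetical 4x4 input image with pixel values 0..15.
image = np.arange(16, dtype=float).reshape(4, 4)
# Kernel weights w1..w9 laid out row by row; training would update these values.
kernel = np.arange(1, 10, dtype=float).reshape(3, 3)

feature_map = conv2d_valid(image, kernel)
print(feature_map.shape)  # (2, 2)
```

The same nine weights are reused at every position. This sharing is why a convolutional layer has far fewer parameters than a dense layer on the same input.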

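As this shows, the output of the convolutional layer depends on the kernel size. With a (3, 3) kernel and stride (1, 1), the 4 x 4 image becomes a 2 x 2 feature map. A different kernel size gives a different output and covers a different area of the image at each step.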
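The relationship between kernel size and output size can be written as a small formula. The sketch below is a hypothetical helper that borrows Keras's padding names. With `"valid"` (no padding) the output shrinks by kernel size minus one. With `"same"` (zero padding) the output keeps `ceil(n / stride)` positions whatever the kernel size. The Keras code later in this article relies on the "same" behaviour so that towers with different kernel sizes produce outputs of equal height and width.

```python
def conv_output_size(n, k, stride=1, padding="valid"):
    """Output length along one spatial axis for input length n and kernel size k."""
    if padding == "same":
        # Zero padding keeps ceil(n / stride) outputs, independent of k.
        return -(-n // stride)
    # 'valid': the kernel must fit entirely inside the input.
    return (n - k) // stride + 1

for k in (1, 2, 3):
    print(k, conv_output_size(4, k), conv_output_size(4, k, padding="same"))
# 1 4 4
# 2 3 4
# 3 2 4
```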

Inception module

The Inception module focuses on the kernel size. The appropriate kernel size depends on the image data set, so instead of committing to one, the Inception module uses several kernel sizes, (1, 1), (3, 3) and (5, 5), side by side. The following image, from the paper https://arxiv.org/abs/1409.4842, shows the architecture of the Inception module.

Concept of Inception module

As I wrote, when we build a convolutional neural network, we need to decide the layers and nodes of the convolutional and dense layers. For a convolutional layer, we also choose the kernel size, such as (3, 3) or (5, 5). The Inception module uses those sizes at once: it applies them in parallel to the same input and concatenates the results. For details, I recommend reading the paper, https://arxiv.org/abs/1409.4842.
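The idea of "several kernel sizes at once" can be sketched without any deep learning library. This is a simplified NumPy illustration, not the paper's module. The helpers `conv2d_same` and `naive_inception_numpy` are hypothetical, and it uses one random kernel per size on a single-channel 4 x 4 input. Zero padding keeps every tower at the input's height and width, so the towers can be stacked as channels.

```python
import numpy as np

def conv2d_same(image, kernel):
    """Stride-1 convolution with zero padding so the output keeps the input size (odd kernels)."""
    k = kernel.shape[0]
    pad = k // 2
    padded = np.pad(image, pad)  # zeros on every side
    h, w = image.shape
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[i:i + k, j:j + k] * kernel)
    return out

def naive_inception_numpy(image, kernels):
    """Run every kernel on the same input and stack the results along a channel axis."""
    towers = [conv2d_same(image, k) for k in kernels]
    return np.stack(towers, axis=-1)

rng = np.random.default_rng(0)
image = rng.random((4, 4))
# One random kernel per size; in a real layer these weights would be learned.
kernels = [rng.standard_normal((s, s)) for s in (1, 3, 5)]
output = naive_inception_numpy(image, kernels)
print(output.shape)  # (4, 4, 3)
```

Each channel of `output` comes from a different kernel size. The next layer can therefore use whichever scale turns out to be useful for the data.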

Code

The figure from https://arxiv.org/abs/1409.4842 shows two types of Inception modules: the naive version and the Inception module with dimension reduction. I'll write both modules with Keras and then build simple models around them.

I'll use the MNIST data set for training, so first I'll prepare the data. The full training set has 60,000 images, which is a bit big for me. (My PC environment is pretty poor for experiments. Seven months have passed since I arrived in Brazil, and it is probably high time I organized a proper environment as a machine learning engineer.) So I'll use only the first two thousand samples.

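```python
from keras.datasets import mnist
from keras.utils import to_categorical

(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Add a channel axis (28x28 grayscale -> 28x28x1) and keep the first 2,000 samples.
x_train = x_train.reshape((60000, 28, 28, 1))[0:2000]
# One-hot encode the labels 0-9 and keep the matching 2,000 samples.
y_train = to_categorical(y_train, 10)[0:2000]
```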
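`to_categorical` turns integer class labels into one-hot rows, which `categorical_crossentropy` expects. The sketch below is a NumPy equivalent written for illustration, not Keras's own implementation. The function name `one_hot` and the example labels are hypothetical. It produces float32 rows with a single 1 at the label's index, as `to_categorical` does by default.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Row i has 1.0 in column labels[i] and 0.0 elsewhere."""
    labels = np.asarray(labels, dtype=int)
    return np.eye(num_classes, dtype="float32")[labels]

encoded = one_hot([5, 0, 4], 10)
print(encoded.shape)  # (3, 10)
print(encoded[0])     # [0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
```

After the slicing above, `x_train` should have shape (2000, 28, 28, 1) and `y_train` shape (2000, 10).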

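naive_inception() implements the naive version of the Inception module. Convolutions with three kernel sizes are applied to the same input and their outputs are concatenated. With Keras, this is really easy to write. (The paper's naive module also has a (3, 3) max pooling branch, which this version leaves out.)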

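```python
import keras
from keras.layers import Dense, Input, Conv2D, Flatten, concatenate
from keras.models import Model

def naive_inception(inputs):
    # Three parallel towers with different kernel sizes. 'same' padding keeps
    # height and width equal so the outputs can be concatenated.
    tower_one = Conv2D(6, (1, 1), activation='relu', padding='same')(inputs)
    tower_two = Conv2D(6, (3, 3), activation='relu', padding='same')(inputs)
    tower_three = Conv2D(6, (5, 5), activation='relu', padding='same')(inputs)
    # Stack the towers along the channel axis (axis 3 for channels-last data).
    x = concatenate([tower_one, tower_two, tower_three], axis=3)
    return x
```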
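To see what naive_inception() costs on MNIST, the trainable parameters can be counted by hand. A Keras `Conv2D` with bias (the default) has `filters * kh * kw * in_channels + filters` weights. The helper `conv2d_params` below is a hypothetical name, and the counts assume the single-channel 28 x 28 x 1 input prepared above.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Trainable parameters of a Conv2D layer with a bias per filter."""
    return filters * kernel_size * kernel_size * in_channels + filters

# naive_inception on a single-channel image: three towers of 6 filters each.
towers = {k: conv2d_params(k, in_channels=1, filters=6) for k in (1, 3, 5)}
print(towers)                # {1: 12, 3: 60, 5: 156}
print(sum(towers.values()))  # 228
print(6 * len(towers))       # 18 output channels after concatenation
```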

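Next is the Inception module with dimension reduction. It places (1, 1) convolutions before the (3, 3) and (5, 5) convolutions and after the (3, 3) max pooling, which reduces the number of channels those layers work on. We can write it just by following the architecture in the figure. (The paper's module also has a standalone (1, 1) convolution branch; the version below keeps three towers.)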

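```python
from keras.layers import Conv2D, MaxPooling2D, concatenate

def dimension_reduction_inception(inputs):
    # Tower 1: (3, 3) max pooling, then a (1, 1) convolution.
    tower_one = MaxPooling2D((3, 3), strides=(1, 1), padding='same')(inputs)
    tower_one = Conv2D(6, (1, 1), activation='relu', padding='same')(tower_one)
    # Tower 2: (1, 1) convolution to reduce channels, then a (3, 3) convolution.
    tower_two = Conv2D(6, (1, 1), activation='relu', padding='same')(inputs)
    tower_two = Conv2D(6, (3, 3), activation='relu', padding='same')(tower_two)
    # Tower 3: (1, 1) convolution to reduce channels, then a (5, 5) convolution.
    tower_three = Conv2D(6, (1, 1), activation='relu', padding='same')(inputs)
    tower_three = Conv2D(6, (5, 5), activation='relu', padding='same')(tower_three)
    # Concatenate along the channel axis.
    x = concatenate([tower_one, tower_two, tower_three], axis=3)
    return x
```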
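The (1, 1) reductions only save computation when the input has many channels. The sketch below counts parameters for the naive module and the three-tower reduction module above, both with 6 filters per convolution. It compares the article's 1-channel MNIST input with a hypothetical 192-channel input. The helpers `naive_params` and `reduction_params` are illustrative names.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Trainable parameters of a Conv2D layer with a bias per filter."""
    return filters * kernel_size * kernel_size * in_channels + filters

def naive_params(c_in, f=6):
    # (1, 1), (3, 3) and (5, 5) convolutions applied directly to the input.
    return sum(conv2d_params(k, c_in, f) for k in (1, 3, 5))

def reduction_params(c_in, f=6):
    # Mirrors dimension_reduction_inception(); max pooling has no weights.
    pool_tower = conv2d_params(1, c_in, f)
    tower_3x3 = conv2d_params(1, c_in, f) + conv2d_params(3, f, f)
    tower_5x5 = conv2d_params(1, c_in, f) + conv2d_params(5, f, f)
    return pool_tower + tower_3x3 + tower_5x5

for c_in in (1, 192):
    print(c_in, naive_params(c_in), reduction_params(c_in))
# 1 228 1272
# 192 40338 4710
```

On single-channel MNIST the "reduction" module actually has more weights, because the (1, 1) layers expand 1 channel to 6. With a wide input, the same structure needs roughly a ninth of the parameters.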

Both types of Inception modules are easy to prepare, and we can build models by stacking them. Here, I'll make very simple models. Training reports the accuracy on the data set, but that accuracy does not show that one module is superior to the other.

The following model uses a single naive Inception module.

```python
def naive_model(x_train):
    # The input shape comes from the data: (28, 28, 1) for MNIST.
    inputs = Input(x_train.shape[1:])
    x = naive_inception(inputs)
    x = Flatten()(x)
    x = Dense(64, activation='relu')(x)
    predictions = Dense(10, activation='softmax')(x)
    model = Model(inputs=inputs, outputs=predictions)
    model.compile(loss=keras.losses.categorical_crossentropy,
                  optimizer=keras.optimizers.SGD(learning_rate=0.0001),
                  metrics=['accuracy'])
    return model

modelA = naive_model(x_train)
# validation_split=0.1 holds out the last 10% of the 2,000 samples for validation.
modelA.fit(x_train, y_train, epochs=50, shuffle=True, validation_split=0.1)
```
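Counting parameters shows where this model's capacity actually sits. The sketch below reproduces, by hand, the totals that `modelA.summary()` should report for naive_model(). It assumes the 28 x 28 x 1 input and 'same' padding used above. The helper names are hypothetical.

```python
def conv2d_params(kernel_size, in_channels, filters):
    return filters * kernel_size * kernel_size * in_channels + filters

def dense_params(in_features, units):
    # Weight matrix plus one bias per unit.
    return in_features * units + units

height, width, channels = 28, 28, 18  # naive_inception output with 'same' padding
inception = sum(conv2d_params(k, 1, 6) for k in (1, 3, 5))
flatten_size = height * width * channels
hidden = dense_params(flatten_size, 64)
output = dense_params(64, 10)

print(inception, flatten_size, hidden, output)  # 228 14112 903232 650
print(inception + hidden + output)               # 904110
```

The Inception module accounts for a few hundred weights, while the Dense(64) layer after Flatten has about 900,000. Accuracy differences between these toy models therefore say little about the modules themselves.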

By plotting the training history, we can see how training progressed.

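```python
%matplotlib inline
import matplotlib.pyplot as plt

def show_history(history):
    # Recent Keras versions log metrics=['accuracy'] as 'accuracy' / 'val_accuracy'.
    plt.plot(history.history['accuracy'])
    plt.plot(history.history['val_accuracy'])
    plt.ylabel('accuracy')
    plt.xlabel('epoch')
    # The second curve is measured on the validation_split hold-out, not the test set.
    plt.legend(['train_accuracy', 'validation_accuracy'], loc='best')
    plt.show()

show_history(modelA.history)
```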
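The key names in `History.history` depend on the Keras version. Older releases stored `metrics=['accuracy']` as `'acc'`/`'val_acc'`, and newer ones use `'accuracy'`/`'val_accuracy'`. If you are unsure which one your installation uses, a small lookup like the hypothetical helper below can pick whichever pair is present. It assumes the model was compiled with `metrics=['accuracy']` and fitted with a validation split.

```python
def accuracy_keys(history_dict):
    """Return the (train, validation) accuracy keys found in a History.history dict."""
    if "accuracy" in history_dict:
        return "accuracy", "val_accuracy"
    if "acc" in history_dict:
        return "acc", "val_acc"
    raise KeyError("no accuracy metric recorded; compile with metrics=['accuracy']")
```

Inside `show_history`, you could then write `train_key, val_key = accuracy_keys(history.history)` and plot `history.history[train_key]` and `history.history[val_key]`.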

We can do the same for the dimension reduction version.

```python
def dimension_reduction_model(x_train):
    inputs = Input(x_train.shape[1:])
    x = dimension_reduction_inception(inputs)
    x = Flatten()(x)
    x = Dense(64, activation='relu')(x)
    predictions = Dense(10, activation='softmax')(x)
    model = Model(inputs=inputs, outputs=predictions)
    model.compile(loss=keras.losses.categorical_crossentropy,
                  optimizer=keras.optimizers.SGD(learning_rate=0.0001),
                  metrics=['accuracy'])
    return model

# Build the dimension reduction model (not naive_model) for the comparison.
modelB = dimension_reduction_model(x_train)
modelB.fit(x_train, y_train, epochs=50, shuffle=True, validation_split=0.1)
```

Visualizing it.

```python
show_history(modelB.history)
```